COLUMNS

Responsible AI - Are We Getting Ahead?

By Boris Bogatirev

The acceleration of the fourth industrial revolution*, paired with accessible and almost endless computing resources (as Gordon Moore had predicted), is shining a strong light on Artificial Intelligence (AI). With a plethora of movies, shows and books to feed our wild imagination on “how and when the machines will take over”, it is natural to talk about ethics and governance. Personal privacy is an ever-growing concern that generates news and controversy daily. Margins are shrinking and commercial competition is growing stronger. All of these factors contribute to our relying more and more on automation to process vast amounts of data and produce the coveted insights that would help our organizations win. With any progress comes the natural question of what price we are paying in exchange.

There are multiple questions that we should be asking regarding responsible applications of AI:

- How can we (humans) make sure that the machines are not planning to take over?

- Will my role in the workforce become obsolete because of AI?

- Will AI algorithms make fair and unbiased decisions about consumers and citizens like me?

- How can I trust an AI output if I do not understand how it works?

- Will my personal information be used by AI algorithms without my consent?

AI is expected to be one of the leading economic drivers of our time, and Canada has the opportunity and responsibility to be a global leader. As a country, we have the research strength, talent pool, and startups to capitalize, but that is not enough if we want to lead in an AI-driven world and shape what it might look like. True leadership, which means taking steps now to establish a world-class AI ecosystem in Canada, is required.

AI is no longer on the horizon. It is here now, and is already having a profound impact on how we live, work, and do business. In fact, most statistical methods behind various AI algorithms have been around for decades. The questions citizens and consumers may have concerning AI mean that businesses need to consider the ethical implications and underlying risks throughout the life cycle of an AI application, and to have a clear strategy on how to evaluate and balance the risks and benefits of implementing AI. Executives will face challenging decisions about how AI applications should be built, what values should be upheld, and whether they should be built in the first place. While ethics are contextual and the perception of them depends in large part on geography, culture, social norms, organizational values and more, they cannot be ignored. Companies need to be intentional in the way they address the numerous ethical implications of AI solutions and how they will respond to unintended consequences.

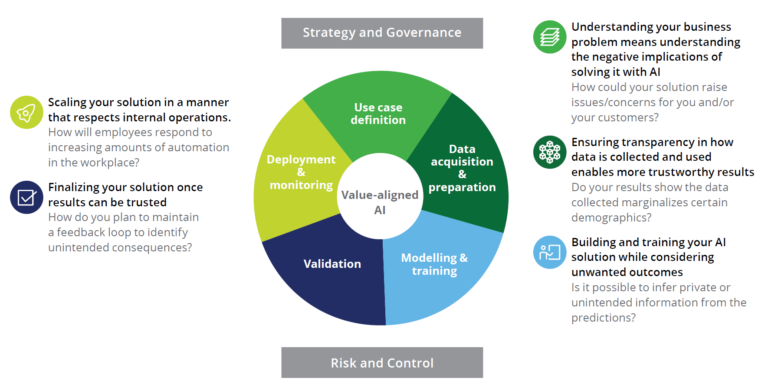

Ethics, both implicit and explicit, have a role at every stage of the AI life cycle. Everyone involved in AI development must be responsible for identifying and responding to ethical concerns. As organizations accelerate their adoption of AI technologies, they must address the various ethical questions that can arise throughout the entire development life cycle.

Most organizations are working to ensure data privacy is protected and datasets are unbiased, but these challenges are just the tip of the iceberg. Ethical issues are often missed because of ambiguity about what ethics mean and a lack of accountability for addressing them. For example, consider the following ethical dilemma for an autonomous car: if it must hit a person, should the autonomous car hit a child or an elderly person? In these cases, the way forward is unclear and not everyone agrees, as many moral frameworks could apply. Questions related to AI’s purpose and values, e.g., where and how it should be used, fall into this category, as do questions about the future nature of collaboration between AI and humans in the workplace. These are longer-term challenges that will likely require new forms of collaboration and discussion. At the same time, this is not the first time that technology has come up against profound questions.

The following framework can be used to consider ethical and responsible applications to help organizations adopt AI and to use it responsibly.

The management of AI ethics cannot be a periodic, point-in-time exercise. It requires continuous support and ongoing monitoring. Without a holistic perspective of the AI life cycle and the different moral and organizational barriers AI could present, organizations can open themselves to fundamental threats to their operations. Identifying and categorizing ethical issues is one of the main challenges an organization will face concerning AI. Understanding the range of concerns is the first step to addressing and mitigating the problem.

The bottom line? Organizations need to decide how they will identify and manage the ethical considerations around AI if they want to benefit from these technologies and ensure their long-term success.

*The four industrial revolutions are, respectively: water and steam power to mechanize production, electric power to create mass production, electronics and information technology to automate production, and the digital revolution.